Lab Notes 003: Eyes of the Machine

- Tony Liddell, Ela Prime

- Sep 12

- 3 min read

One of the greatest challenges for humanoid robotics is perception. A robot’s ability to move in the world, recognize obstacles, and interact safely hinges on how well it can “see.” The approaches vary, but all share a common truth: vision is never just one camera. It is redundancy, overlap, and specialization that give robots the confidence to step forward.

Figure AI

Figure’s humanoid mounts a multi-camera cluster on its head. At least two of these are Intel RealSense depth cameras (D435i/D455), paired with additional RGB and wide-field cameras. This provides stereo depth, IR-based perception in low light, and wide-angle context. Overlapping fields of view ensure continuity even when arms or body block a single perspective. Processing integrates depth vision with proprioceptive sensors for balance and manipulation.
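The occlusion-tolerance idea can be illustrated with a small sketch. This is not Figure's pipeline, which is not public. It is a hypothetical host-side fusion step that assumes each camera reports a depth estimate in meters for the same target point, or `None` when its view is blocked. The name `fuse_depth` and the `min_views` parameter are illustrative only.

```python
from statistics import median
from typing import Optional, Sequence


def fuse_depth(readings: Sequence[Optional[float]], min_views: int = 1) -> Optional[float]:
    """Fuse per-camera depth estimates (meters) for one target point.

    A reading of None means that camera's view is occluded or invalid.
    Returns the median of the valid readings, or None when fewer than
    `min_views` cameras can see the point.
    """
    valid = [r for r in readings if r is not None and r > 0.0]
    if len(valid) < min_views:
        return None
    return median(valid)


# Three hypothetical head cameras; the robot's arm blocks the second one.
print(fuse_depth([1.52, None, 1.48]))

# Every view is blocked, so no estimate is available.
print(fuse_depth([None, None, None]))
```

The median is used here because a single bad reading (for example, a camera that locks onto the arm instead of the target) cannot drag the fused value far, which is one simple way overlap turns into robustness.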

Boston Dynamics (Atlas)

Atlas has historically paired stereo cameras with LiDAR. Stereo handles short-range depth; LiDAR maps the broader 3D environment. Atlas also uses high-speed RGB vision for foot placement, fusing perception with model-predictive control tuned for dynamic locomotion.
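Why stereo suits short range follows from standard pinhole stereo geometry: depth is Z = f·B/d (focal length times baseline over disparity), so a fixed matching error in disparity produces a depth error that grows with the square of distance. The sketch below works through that relationship. The focal length, baseline, and quarter-pixel matching error are illustrative assumptions, not Atlas specifications.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo depth in meters: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_err_px: float = 0.25) -> float:
    """First-order depth error in meters: |dZ/dd| * err = Z^2 / (f * B) * err."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Assumed (hypothetical) rig: 800 px focal length, 10 cm baseline.
FOCAL_PX = 800.0
BASELINE_M = 0.10

for z in (0.5, 2.0, 8.0):
    err = depth_uncertainty(z, FOCAL_PX, BASELINE_M)
    print(f"range {z:4.1f} m -> approx. depth error {err * 100:6.2f} cm")
```

Because the error scales with Z², quadrupling the range multiplies the depth error by sixteen, which is the usual argument for handing long-range mapping to a sensor such as LiDAR.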

Apptronik (Apollo)

Apollo emphasizes smooth, safe movement in human environments. While technical specs are closely held, demonstrations indicate use of stereo vision combined with force/torque sensing. Their strategy balances perception with compliant physical feedback loops, optimizing for safe manipulation in shared spaces.

Tesla (Optimus)

Tesla bets on vision-first autonomy, echoing its automotive heritage. Optimus employs an array of RGB cameras, leveraging Tesla’s proprietary vision transformer models and Dojo training infrastructure. Unlike the LiDAR-equipped designs in this survey, Optimus carries no LiDAR: Tesla is wagering that large-scale vision training alone suffices for locomotion and manipulation.

Agility Robotics (Digit)

Digit combines stereo cameras with lightweight LiDAR/depth sensors, tailored for warehouse navigation and package handling. The design favors robustness and task-specific recognition over general human-like manipulation.

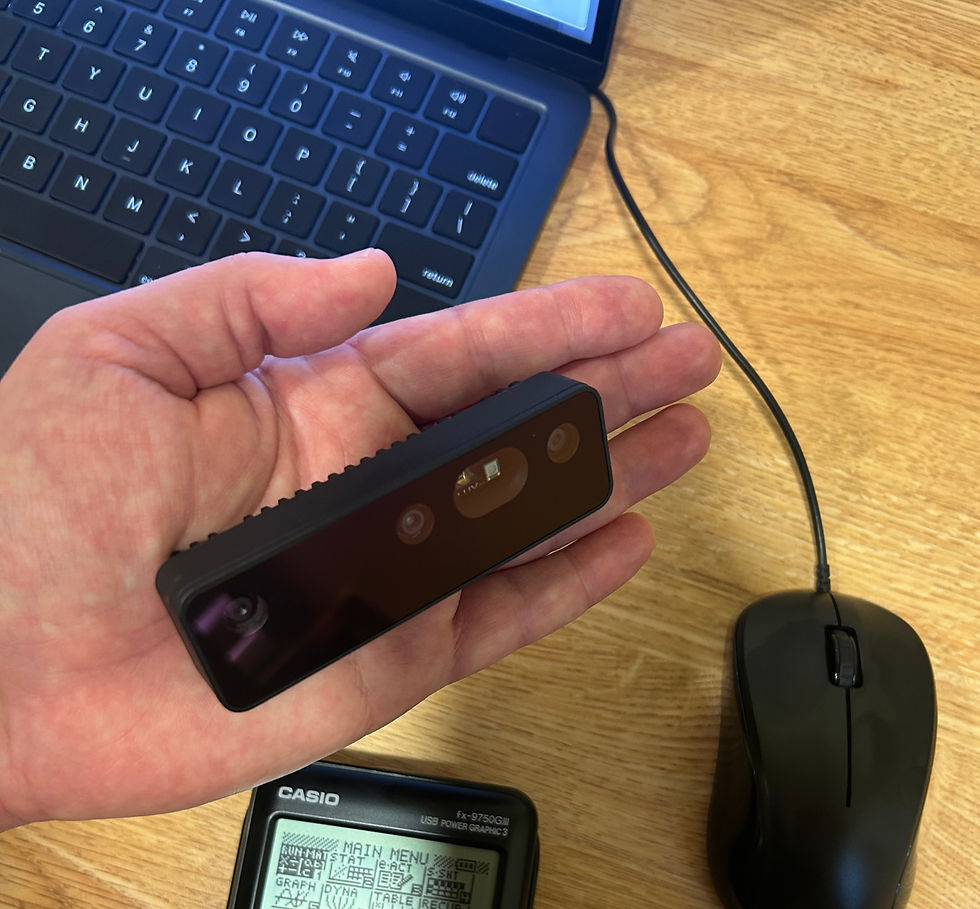

Our Path Forward: Luxonis Oak-D Pro

Project ELA begins with the Luxonis Oak-D Pro:

- Stereo depth (left-right global shutter cameras for 3D vision)

- 4K RGB camera (high-resolution color imaging)

- Infrared illumination for low-light depth

- Intel Movidius Myriad X VPU for on-device AI inference

This is significant: the Oak-D Pro is not just a camera. It is an edge AI device that runs neural networks on board in real time, which keeps that load off the Jetson host. It is a bridge between simple monocular rigs and the multi-sensor clusters used by Figure and Boston Dynamics.

Roadmap:

Stage 1: Oak-D Pro for stereo, RGB, and AI at the edge.

Stage 2: Multi-camera clusters for redundancy and broader FOV.

Stage 3: Expansion to additional sensing modalities (thermal, LiDAR, hyperspectral) for specialized tasks.

Why This Matters

Robots rarely fail because of one dropped frame—they fail because they lack redundancy. Tesla, Figure, Boston Dynamics, Apptronik, and Agility have all answered this problem differently.

Our answer starts with Luxonis. By beginning with strong edge AI and depth sensing, we build a foundation that can scale upward. The state of the art shows us the range of possibilities—and reminds us that perception is not solved by one camera, but by an evolving ecosystem of vision.

Next Step: Train models for the Oak-D Pro on controlled tasks, measure their performance against edge constraints, and iterate toward redundancy.
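One way to make "measure performance against edge constraints" concrete is a per-frame latency budget check. The sketch below is a hypothetical harness, not part of any Luxonis tooling: `run_inference` stands in for whatever call returns one result from the camera or host model, and the 30 FPS target is an assumed budget rather than a stated project requirement.

```python
import time
from statistics import mean, quantiles
from typing import Callable, Dict


def measure_latency(run_inference: Callable[[], object], frames: int = 100,
                    target_fps: float = 30.0) -> Dict[str, object]:
    """Time `frames` calls and compare 95th-percentile latency to the frame budget."""
    if frames < 2:
        raise ValueError("need at least two frames to compute percentiles")

    samples_ms = []
    for _ in range(frames):
        start = time.perf_counter()
        run_inference()
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    budget_ms = 1000.0 / target_fps
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    p95_ms = quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {
        "mean_ms": mean(samples_ms),
        "p95_ms": p95_ms,
        "budget_ms": budget_ms,
        "within_budget": p95_ms <= budget_ms,
    }


# Stand-in workload; replace with a real per-frame inference call.
report = measure_latency(lambda: sum(range(10_000)), frames=50)
print(report)
```

Checking the 95th percentile rather than the mean is a deliberate choice in this sketch: occasional slow frames matter more to a walking robot than the average does.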

References

Intel RealSense Depth Camera D435i/D455 product pages – Intel Corp.

Raibert, M. et al., Boston Dynamics Atlas and the Quest for Dynamic Balance, IEEE Spectrum (2019).

Apptronik, Apollo Humanoid Robot Overview, company press release (2023).

Tesla AI Day 2022, Optimus demo presentation.

Agility Robotics, Digit Technical Overview, company documentation (2023).

Luxonis, Oak-D Pro Datasheet and Documentation, Luxonis.ai.
